GitLab: fixing the "could not retrieve the needed artifacts" error

The error

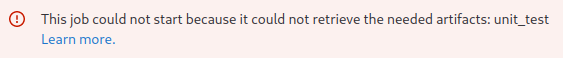

When deploying your application from GitLab, you might run into the following error:

You’ll often see this error in the following scenario:

- You run your deployment pipeline, which includes a testing/linting/static analysis/… step that produces some kind of artifact that is configured to expire at some point.

- Your deployment job runs in a later stage, after that step.

- After the artifact expiry date, for whatever reason, you want to re-run the deployment job.

- GitLab reports that the job can’t run because it depends on an artifact that’s no longer present: “This job could not start because it could not retrieve the needed artifacts”.

- You have to run the testing/linting/static analysis/… job first. Only then can the deployment job run again.
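
The scenario above can be reproduced with a pipeline along these lines. This is only a minimal sketch: the stage names, job names, and the five-minute expiry are assumptions chosen for illustration, and whether the retry actually fails may also depend on the project’s artifact retention settings.

```yaml
# Hypothetical minimal pipeline; all names are illustrative.
stages:
  - test
  - deployment

unit_test:
  stage: test
  script:
    - echo "All tests passed!" > test_results.txt
  artifacts:
    paths: ["test_results.txt"]
    expire_in: 5 minutes # Short expiry so the situation is easy to trigger

deployment_job:
  stage: deployment
  # No dependencies: or needs: here, so the job fetches the artifacts
  # of the jobs in earlier stages, including test_results.txt.
  script:
    - echo "Deploying application..."
```

Retrying deployment_job once the artifact has expired should then produce the error described above.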

The default artifact setup

By default, every job in your pipeline fetches all artifacts from the jobs in the stages that preceded it. That’s why, in the example above, the deployment job gets blocked by artifacts that it doesn’t actually need. Luckily, there are two GitLab YAML keywords that control the artifact fetching behavior: dependencies and needs.

With dependencies: you can list explicitly which jobs you want to fetch artifacts from. If you don’t want any artifacts at all, you can specify an empty list:

    deployment_job:
      stage: deployment
      dependencies: [] # Don't download any artifacts
      script:
        - echo "Deploying application..."
        - echo "Done!"
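
The same keyword also accepts job names. As a sketch, assuming a hypothetical build_app job that produces a dist/ directory, the deployment job below fetches only that job’s artifacts and skips whatever unit_test produced:

```yaml
# Hypothetical pipeline; build_app and the dist/ directory are assumptions.
stages:
  - build
  - test
  - deployment

build_app:
  stage: build
  script:
    - mkdir -p dist && echo "binary" > dist/app
  artifacts:
    paths: ["dist/"]

unit_test:
  stage: test
  script:
    - echo "All tests passed!" > test_results.txt
  artifacts:
    paths: ["test_results.txt"]
    expire_in: 5 minutes

deployment_job:
  stage: deployment
  dependencies: [build_app] # Fetch only build_app's artifacts
  script:
    - ls dist/
```

Keep in mind that the artifacts of a listed job can expire too, in which case the deployment job gets blocked in the same way.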

The needs: keyword, on the other hand, lets you execute jobs out of order. Normally, jobs run in the stage you assign them to. With needs:, however, you can create a directed acyclic graph (DAG) in which a job starts as soon as the jobs it needs are done. In the example below, the upload_test_results job won’t start until the unit_test job has finished, despite both of them being in the same stage.

    unit_test:
      stage: test
      script:
        - echo "All tests passed!" > test_results.txt
      artifacts:
        paths: ["test_results.txt"]
        expire_in: 5 minutes

    upload_test_results:
      stage: test
      needs: [unit_test]
      script:
        - cat test_results.txt # Print the artifact fetched from unit_test
        - echo "Uploading test results to bucket"
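
needs: also works across stages. In the sketch below (all job names are hypothetical), publish_docs sits in the last stage but starts as soon as build_docs finishes, without waiting for slow_tests:

```yaml
# Hypothetical pipeline illustrating out-of-order execution with needs:.
stages:
  - build
  - test
  - deploy

build_docs:
  stage: build
  script:
    - echo "<h1>Docs</h1>" > index.html
  artifacts:
    paths: ["index.html"]

slow_tests:
  stage: test
  script:
    - sleep 60 # Stand-in for a long-running test suite

publish_docs:
  stage: deploy
  needs: [build_docs] # Start right after build_docs; don't wait for the test stage
  script:
    - cat index.html
```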

Interplay between dependencies: and needs:

We can fix our initial problem by specifying dependencies: [] in our deployment job(s). Unfortunately, when jobs do depend on other jobs and their artifacts, specifying an empty dependencies list will stop any artifact fetching from those “needed” jobs as well. Let’s look at an example of some jobs that clean up a test environment after it’s not needed anymore.

In this first configuration version, we run into the initial problem. Once the test_results.txt artifact expires, we won’t be able to run fetch_load_balancer_ip or deregister_test_env_dns_record anymore until we re-run the unit_test step.

    unit_test:
      stage: test
      script:
        - echo "All tests passed!" > test_results.txt
      artifacts:
        paths: ["test_results.txt"]
        expire_in: 5 minutes

    fetch_load_balancer_ip:
      stage: deploy
      script:
        - "LB_IP=192.168.0.5" # Let's pretend this is fetched by some command
        - echo "LB_IP=$LB_IP" > lb_ip.env
      artifacts:
        reports:
          dotenv: lb_ip.env

    deregister_test_env_dns_record:
      stage: deploy
      needs: [fetch_load_balancer_ip]
      script:
        - echo "Deregistering DNS record for environment at IP $LB_IP"

Let’s try fixing that by adding dependencies: [] to our cleanup jobs.

    unit_test:
      stage: test
      script:
        - echo "All tests passed!" > test_results.txt
      artifacts:
        paths: ["test_results.txt"]
        expire_in: 5 minutes

    fetch_load_balancer_ip:
      stage: deploy
      dependencies: [] # Don't fetch any artifacts
      script:
        - "LB_IP=192.168.0.5" # Let's pretend this is fetched by some command
        - echo "LB_IP=$LB_IP" > lb_ip.env
      artifacts:
        reports:
          dotenv: lb_ip.env

    deregister_test_env_dns_record:
      stage: deploy
      dependencies: [] # Don't fetch any artifacts
      needs: [fetch_load_balancer_ip]
      script:
        - echo "Deregistering DNS record for environment at IP $LB_IP"

The jobs now run, even when the test artifact is no longer available. We broke the deregister_test_env_dns_record job though. It depends on the lb_ip.env file from the fetch_load_balancer_ip job, but that environment file is no longer fetched.

To fix that, we need to explicitly list the dependencies for that job:

    unit_test:
      stage: test
      script:
        - echo "All tests passed!" > test_results.txt
      artifacts:
        paths: ["test_results.txt"]
        expire_in: 5 minutes

    fetch_load_balancer_ip:
      stage: deploy
      dependencies: []
      script:
        - "LB_IP=192.168.0.5" # Let's pretend this is fetched by some command
        - echo "LB_IP=$LB_IP" > lb_ip.env
      artifacts:
        reports:
          dotenv: lb_ip.env

    deregister_test_env_dns_record:
      stage: deploy
      dependencies: [fetch_load_balancer_ip] # List the exact dependencies
      needs: [fetch_load_balancer_ip]
      script:
        - echo "Deregistering DNS record for environment at IP $LB_IP"

That fixed our problem! As a last step, we can simplify this configuration a little bit. In modern GitLab versions, you no longer have to specify a dependencies: section when you also have a needs: section, because the job only fetches artifacts from the jobs listed under needs:. That way, you don’t have to specify the same list twice and keep both copies in sync.

    unit_test:
      stage: test
      script:
        - echo "All tests passed!" > test_results.txt
      artifacts:
        paths: ["test_results.txt"]
        expire_in: 5 minutes

    fetch_load_balancer_ip:
      stage: deploy
      dependencies: []
      script:
        - "LB_IP=192.168.0.5" # Let's pretend this is fetched by some command
        - echo "LB_IP=$LB_IP" > lb_ip.env
      artifacts:
        reports:
          dotenv: lb_ip.env

    deregister_test_env_dns_record:
      stage: deploy
      needs: [fetch_load_balancer_ip] # Needs without dependencies list.
      script:
        - echo "Deregistering DNS record for environment at IP $LB_IP"
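
A related variation, for jobs that need the ordering but not the files: needs: also accepts a longer form with an artifacts flag. The notify_team job below is hypothetical and only meant as a sketch; it waits for fetch_load_balancer_ip but downloads nothing from it.

```yaml
# Hypothetical extra job, appended to the configuration above.
notify_team:
  stage: deploy
  needs:
    - job: fetch_load_balancer_ip
      artifacts: false # Wait for the job, but don't download its artifacts
  script:
    - echo "Test environment cleanup has started"
```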

If you want to see all of this at work and play around with it, I made an example repository that demonstrates what I wrote about.